SQL Server 2012/2014 AlwaysOn Availability Groups:

- Article Summary

- Part 1 – AlwaysOn Introduction

- Part 2 – AlwaysOn Design

- Part 3 – Install and Configure Windows Server 2012 R2 in Core mode

- Part 4 – WSFC Cluster Creation

- Part 5 – Install SQL Core on Windows Core Server

- Part 6 – AlwaysOn Availability Groups Creation

- Part 7 – AlwaysOn Availability Groups Creation (Advanced, with dedicated Replication Network)

- Part 8 – Methods to add Database on Availability Groups (SCOM Example)

- Part 9 – AlwaysOn Availability Groups – PowerShell Monitoring

- ANNEX (Part 6/7) – Manage SQL Endpoint

This part cover the configuration of the WSFC Cluster required for the AlwaysOn Availability Group.

WSFC Node Updates [Note]

In addition to WSUS update, you have to check Hotfix/Rollup for WSFC Cluster:

| WS2012 R2 |

Or on this article from the TechNet Blog “The troubleshooters and problem”

|

| WS2012 |

|

| WS2008 R2 SP1 |

|

AlwaysOn – Prerequisites

Install Windows Features

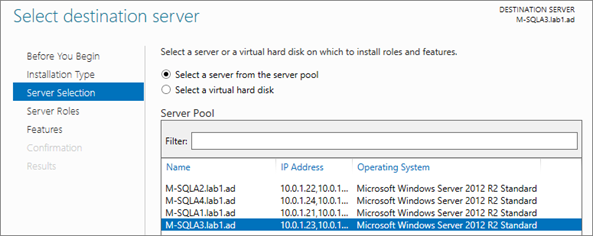

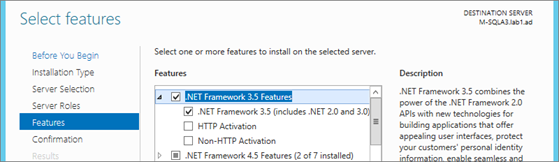

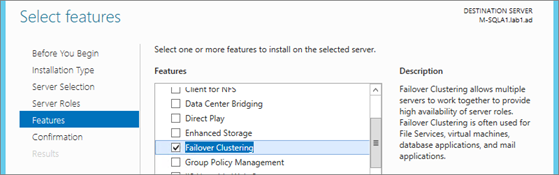

On all nodes install “.NET 3.5” and “Failover Clustering” features:

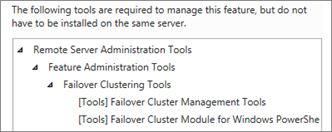

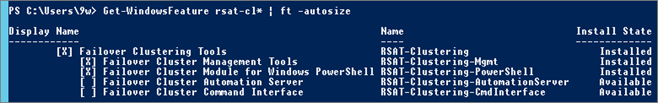

Add “Failover Clustering Administration Tools”:

Note: Only “Failover Cluster Module for PowerShell” is available on core node.

Or installation via PowerShell:

|

PowerShell |

|

| # For remote install add: -Computer <Hostname> Install-WindowsFeature Net-Framework-Core -source V:\sources\sxs Install-WindowsFeature Failover-Clustering -IncludeManagementTools# ‘-IncludeManagementTools’ includes:# * Failover Cluster Management Tools # * Failover Vluster Module for Windows PowerShell |

|

WSFC Cluster – Creation

During the WSFC Cluster creation a CNO (Cluster Name Object) is created in Active Directory. This operation requires specific AD right.

There are three options:

- Give Active Directory right “Create Object” to the SQL Admin account which you plan to create the WSFC Cluster.

- Add temporarily the SQL Admin Account to the “Domain Admins” group (I use this for the lab)

- Pre-stage the CNO before installation (see TechNet)

Prestage Cluster Computer Objects in Active Directory Domain Services:

https://technet.microsoft.com/en-us/library/dn466519.aspx

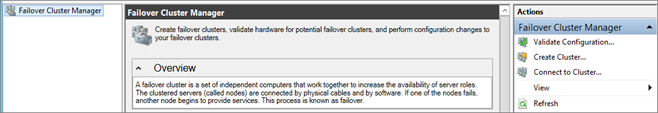

From M-SQLA1, launch “Failover Cluster Manager” console:

Launch “Validate Configuration”:

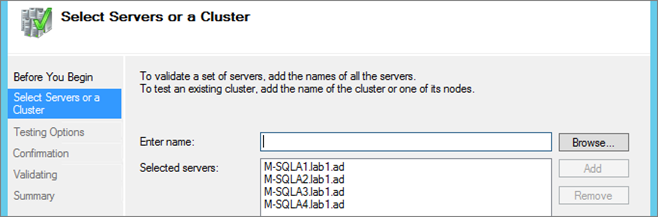

Add all SQL AlwaysOn nodes:

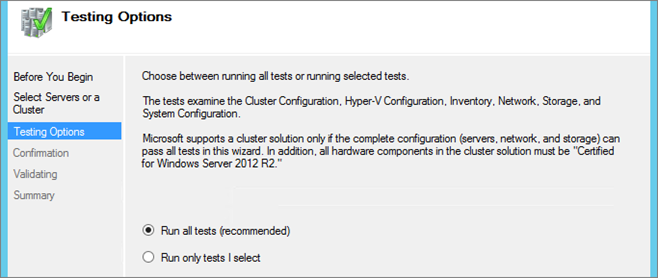

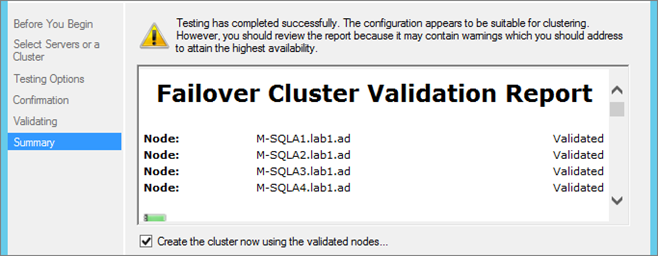

Do the Validation tests:

Check “Create the cluster now…”:

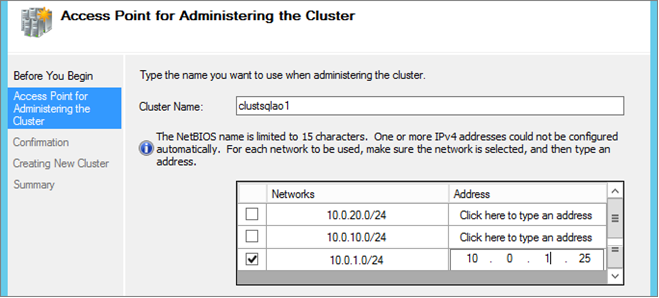

Enter Cluster name and add an IP (on the Public VLAN):

Note: Cluster Name and IP are used for management and Cluster operations. These resources are known as “Cluster Core Resources”.

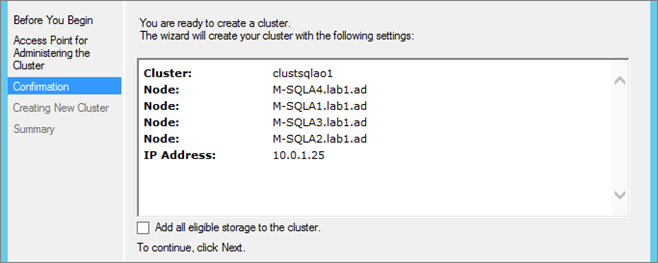

Create Cluster:

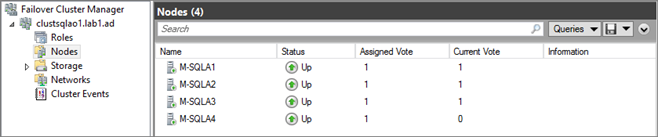

Check nodes status:

WSFC Cluster – Configuration

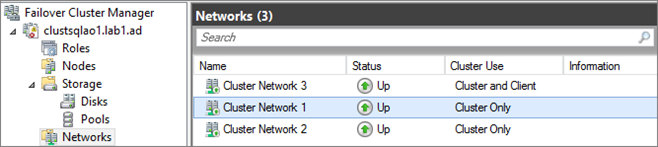

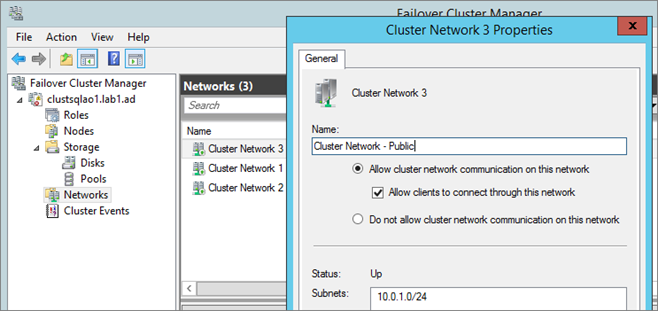

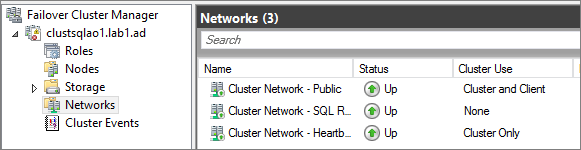

Configure Networks

Rename each Cluster Network and configure Communications allowed.

| Network | Communications |

| Public | Cluster and Client |

| Heartbeat | Cluster Only |

| Replication | None |

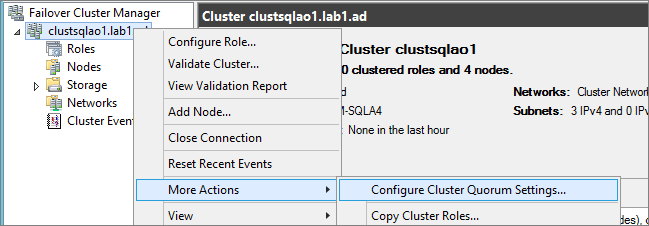

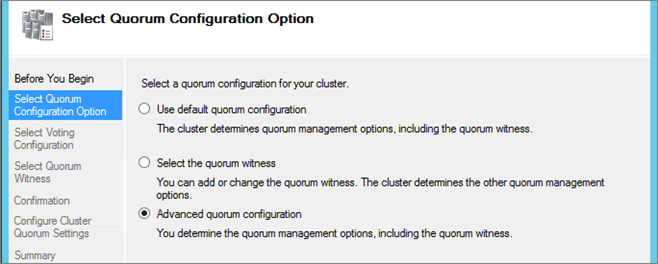

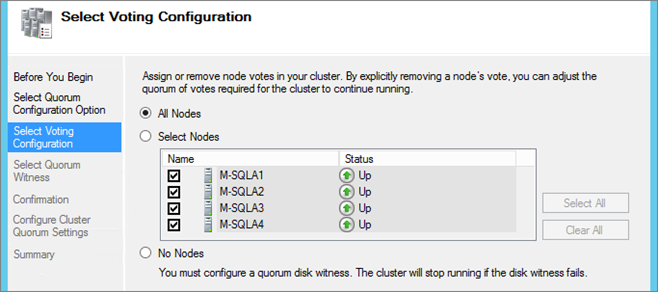

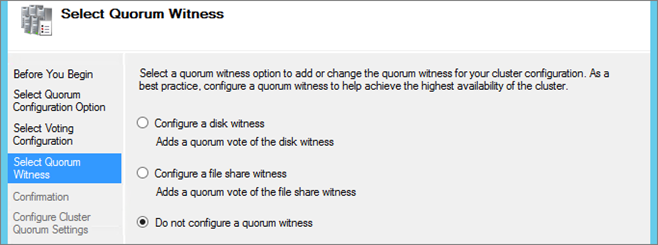

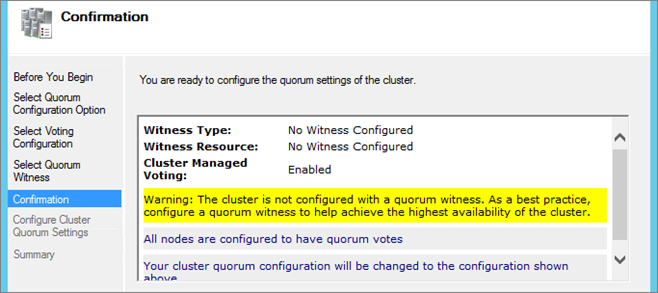

Configure Quorum

In this example I do not use a Witness. I use only the Dynamic Quorum mode (enabled by default).

Be careful, because Dynamic Quorum works fine as long as the nodes go down one by one. If multiples nodes fail at the same time (e.g.: the loss of a datacenter), the Dynamic Quorum may have malfunction (no recalculate vote). I will do further tests in a future article.

So for Production environment it’s recommended to use a Witness.

Configure Quorum:

| NOTE | Create WSFC Cluster through PowerShell |

|

PowerShell |

|

| # Run “Validate Configuration and Open Report $clustreportPath = “D:\Reports\TestClusterReport_xxx-xx-xx” Test-Cluster -ReportName $clustreportPath Invoke-Item $clustreportPath # Create Cluster $ClustVIP = “10.0.1.25” $ClusterName = “CN=clustsqlao1,OU=Clusters,DC=lab1,DC=ad” write-host “Create Cluster: $($ClusterName)” New-Cluster -Name $ClusterName -StaticAddress $ClustVIP -NoStorage -Node “M-SQLA1”,“M-SQLA2”,“M-SQLA3”,“M-SQLA4” #Check Cluster Creation $clust = Get-Cluster 2> $null if ($clust -eq $null) { Write-Warning “Cluster not found” } else {Get-ClusterNode} |

|

Quorum Configuration Note

Dynamic Quorum is a new feature came with Windows Server 2012.

When Dynamic Quorum is enabled (by default) , the cluster dynamically manages the vote assignment to nodes, based on the state of each node. Votes are automatically removed from nodes that leave active cluster membership, and a vote is automatically assigned when a node rejoins the cluster.

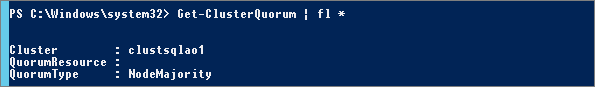

Get the Quorum Type:

|

PowerShell |

|

| Get-ClusterQuorum | fl * | |

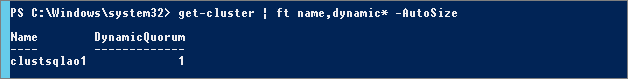

Check if Dynamic Quorum is enabled:

|

PowerShell |

|

| Get-Cluster | ft name,dynamic* -Autosize | |

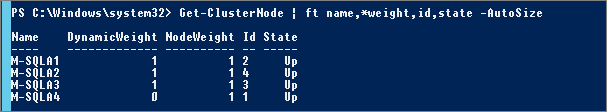

View the “Dynamic Node Weight” status for nodes:

|

PowerShell |

|

| Get-ClusterNode | ft name,*weight,id,state -Autosize | |

| Property | Description |

| DynamicWeight | This is the node weight manage by “Dynamic Quorum”. If a node is failed, DynamicWeight = 0 |

| NodeWeight | This is the “hard” configuration, for example you can decided that nodes on a DR site will not have a vote, so in this case NodeWeight = 0. |

For more information about Quorum Configuration see TechNet Article:

Configure and Manage the Quorum in a Windows Server 2012 Failover Cluster

https://technet.microsoft.com/fr-fr/library/jj612870.aspx

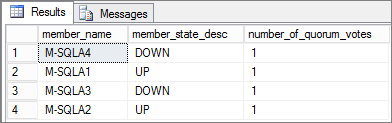

| NOTE | Retrieve “NodeWeight” from SQL (Transact-SQL) |

| SELECT member_name, member_state_desc, number_of_quorum_votes FROM sys.dm_hadr_cluster_members;

|

|

Guest Cluster Virtualization Configuration

This part is for Hyper-V Hypervisor, I will add later configuration for VMware vSphere.

Hyper-V – Heartbeat configuration

On Hyper-V, during a Live Migration of a node, the Cluster resources (in our case the AAG) can failover to another node. The configuration bellow is for tuning WSFC Cluster on a multi-site environment or on a virtualized environment

What’s happen:

WSFC nodes send heartbeat packets to other nodes of the cluster. The default port for cluster communication is TCP\UDP 3343.

If a node does not receive a response from another node for a specified period of time, the cluster removes the node from cluster membership. By default, a cluster node is considered down if it does not respond within 5 seconds. In this case another node will initiate failover

At the end of a Live Migration, the VM is stopped on the original Hyper-V host and begins to start on the destination host. During this short time, the VM node may exceed the Heartbeat threshold and so a failover starts. (Note: With the 2012 R2 version, “Live migration” has been improved. Migration is faster and after several migration tests of SQL node the Availability Group have not failover).

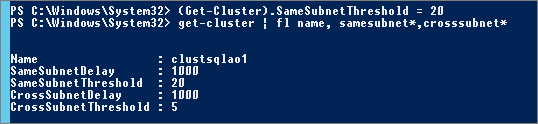

To prevent this problem, you have to increase the Threshold (there are two parameters, one for Cluster on same subnet, and one for cross-subnet):

| Parameter | Default Value | Range | Configuration needed |

| SameSubnetThreshold | 5 heartbeats | 5 <> 120 heartbeats | 20 heartbeats |

| CrossSubnetThreshold | 5 heartbeats | 5 <> 120 heartbeats | 20 heartbeats |

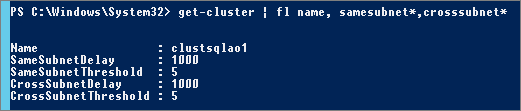

Retrieve Cluster configuration

get-cluster | fl name, samesubnet*,crosssubnet*

Change values (in my lab same subnet):

(Get-Cluster).SameSubnetThreshold = 20

For information:

You can also configure Heartbeat Frequency.

By default, regardless of subnet configuration, heartbeat frequency is once every second (1000 milliseconds). So with the configuration above, we have 20 x 1000 = 20 seconds before node becomes unavailable for the cluster (This is the Microsoft recommendation for Hyper-V).

Heartbeat frequency:

| Parameter | Default Value | Range |

| SameSubnetDelay | 1000 ms | 250 <> 2000 ms |

| CrossSubnetDelay | 1000 ms | 250 <> 4000 ms |

Hyper-V – Place SQL VM Nodes on Different Physical hosts

For guest clustering, if you have multiple Hyper-V hosts in cluster you have to host VM on different physical hosts. To prevent that the VMs do not end up on the same host (during Live Migration or PRO operation) you have to configure the Anti-Affinity rules on Hyper-V (AntiAffinityClassNames Paramerter).

View Anti-Affinity Rules

Get-ClusterGroup | Select AntiAffinityClassNames

On the first Hyper-V Cluster:

$ColAntiAffinity = New-Object System.Collections.Specialized.StringCollection

$ColAntiAffinity.Add(“GuestClust-AlwaysOn”)

(Get-ClusterGroup -Name M-SQLA1).AntiAffinityClassNames = $ColAntiAffinity

(Get-ClusterGroup -Name M-SQLA2).AntiAffinityClassNames = $ColAntiAffinity

Repeat the operation on the second Hyper-V Cluster for M-SQLA3 and M-SQLA4 nodes.

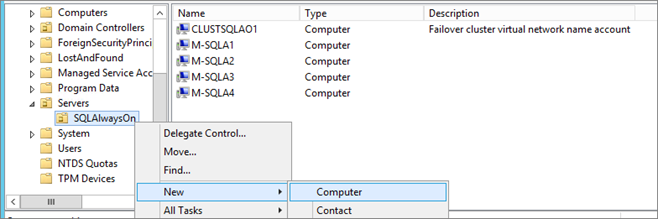

Prestage – Availability Group Listener

Active Directory

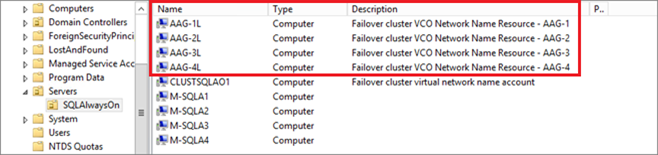

The Availability Group Listeners are register as Cluster Resources and so in Active Directory as VCO (Virtual Computer Object). As the CNO (Cluster Name Object), we have to prestage these VCO and give the appropriate permissions.

With a Domain Admin account, launch the “Active Directory Users and Computers“ console

Click on the “View” menu and select “Advanced Features”.

Go to the OU where there is the AlwaysOn cluster CNO, and create a new computer:

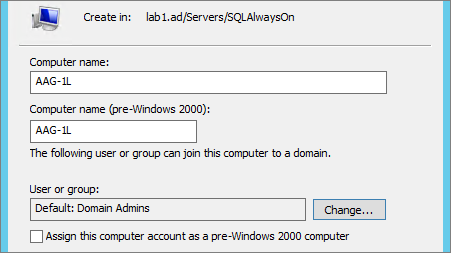

Enter the Listener name:

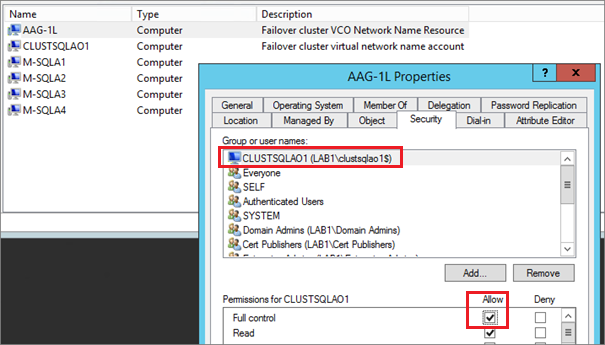

Edit Properties of the created VCO, add a description, go to the “Security” tab and add the AlwaysOn cluster CNO (here: clustsqlao1), under Permissions allow “Full Control”

Repeat operation for all Listeners:

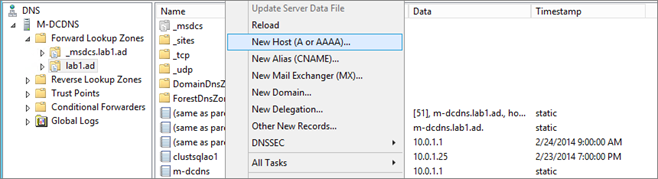

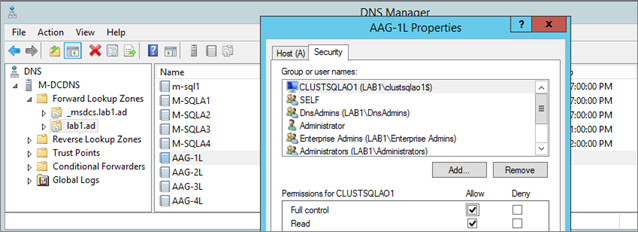

DNS (Integrated in AD)

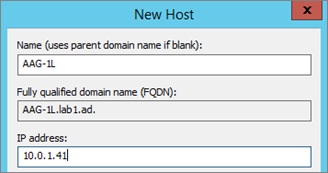

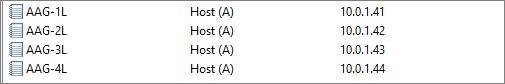

Launch the DNS console, go to you domain zone, and create an new Host (A or AAAA):

Enter the Listener Name and the IP Address:

Repeat the operation for all Listener :

Allow the Cluster Name Object (CNO) to update DNS records:

Note: This configuration must be done DNS is integrated to Active Directory.

Edit the properties of record created previously:

Go to the “Security” tab and add the CNO, give the Full Control permissions:

Repeat the operation on all Listeners DNS records.

Next

The next part covers the installation of SQL Server on Core node (automated through unattened INI file): AlwaysOn Availability Group – Part 5 – Install SQL Core Server

Nice article.

I am currently setting up a server Windows 2012r2 non-shared disk cluster to have a node at each data centre and use SQL AG’s to do the replication. I have VMware 5.1u1. I have an issue in presenting the disks to the guest os, they will not come on line unless I make them RDM’s, if I use datastore disks it just gives errors.

Is there a secret to using non-shared disk from VMware that I am missing?

Thank you! I’m not sure to understand, by « on line » you talk at the windows level or at the cluster level? For AAG, disks should not be configured as Cluster resources. Each SQL node must have its own “local” disks. You can use virtual disk (VMDK) or RDM LUN but for Production it is not recommended to use virtual disks (VMDK) for database and transaction log files (it’s ok for a mock-up), so you need to present different RDM disks or to each node (not the same to multiple nodes). What is the error with VMDK ?

This is the best resource I’ve ever seen outlining this process, thanks.

Quick question, I configured the heartbeat network as ‘Cluster only’ yet still got a message that it could not bring the cluster online because the heartbeat network was not configured to allow client access. Did I miss a step?

Each node in the cluster has a static IP and FQDN in the same subnet 10.0.1.0. The IP is assigned to the NIC on the node. Where do you configure the cluster IP 10.0.1.25, does it have a NIC, where is this NIC?

I think the answer to my question is found. From the arp table, I saw the same MAC (machine) has 2 different IPs. .121-3 are nodes 1-3 while .120 was assigned to the cluster.

192.168.1.120 00-15-5d-01-1c-23 dynamic

192.168.1.121 00-15-5d-01-1c-21 dynamic

192.168.1.122 00-15-5d-01-1c-22 dynamic

192.168.1.123 00-15-5d-01-1c-23 dynamic