Thanks to Windows Server 2016, we will be able to implement a HyperConverged infrastructure. This marketing term means that the storage, network and compute components are installed locally on the servers, so this solution needs no SAN. Instead, Storage Spaces Direct is used (for further information please read this topic). In this topic I’ll describe how to deploy a HyperConverged infrastructure on Nano Servers in Windows Server 2016 TP4. Almost all the configuration will be done with PowerShell.

You said HyperConverged?

HyperConverged infrastructure is based on servers whose disks are Direct-Attached Storage (DAS), connected internally or through a JBOD tray. Each server (at least four are needed to implement Storage Spaces Direct) has its own storage devices, so there are no shared disks or JBODs.

HyperConverged infrastructure is based on well-known features such as Failover Clustering, Cluster Shared Volumes and Storage Spaces. However, because the storage devices are not shared between the nodes, we need something more to create a clustered Storage Space with DAS devices. This is called Storage Spaces Direct. Below you can find the Storage Spaces Direct stack.

On the network side, Storage Spaces Direct leverages RDMA-capable networks of at least 10 Gb/s. This is because the replication that occurs through the Software Storage Bus needs the low latency that RDMA provides.

Requirements

Because I don’t have enough hardware in my lab, I deploy the HyperConverged infrastructure to virtual machines. Now that we have nested Hyper-V, we can do this :). To follow this topic, you need the following:

- Windows Server 2016 Technical Preview 4 ISO

- A Hyper-V host installed with Windows Server 2016 Technical Preview 4

- This script to enable nested Hyper-V

Create Nano Server VHDX image

To create a Nano Server image, you have to copy Convert-WindowsImage.ps1 and NanoServerImageGenerator.psm1 to a folder.

Then I have written a short script to create the four Nano Server VHDX images:

Import-Module C:\temp\NanoServer\NanoServerImageGenerator.psm1

# Nano Server Name

$NanoServers = "HCNano01", "HCNano02", "HCNano03", "HCNano04"

$IP = 170

Foreach ($HCNano in $NanoServers){

New-NanoServerImage -MediaPath "D:" `

-BasePath C:\temp\NanoServer\Base `

-TargetPath $("C:\temp\NanoServer\Images\" + $HCNano + ".vhdx") `

-ComputerName $HCNano `

-InterfaceNameOrIndex Ethernet `

-Ipv4Address 10.10.0.$IP `

-Ipv4SubnetMask 255.255.255.0 `

-Ipv4Gateway 10.10.0.1 `

-DomainName int.homecloud.net `

-Clustering `

-GuestDrivers `

-Storage `

-Packages Microsoft-NanoServer-Compute-Package, Microsoft-Windows-Server-SCVMM-Compute-Package, Microsoft-Windows-Server-SCVMM-Package `

-EnableRemoteManagementPort

$IP++

}

This script creates a Nano Server VHDX image for each machine: HCNano01, HCNano02, HCNano03 and HCNano04. I also set the domain and the IP address. I add the clustering feature, guest drivers, storage and Hyper-V features, plus the SCVMM agent in case you need to add your cluster to VMM later. For more information about the Nano Server image creation, please read this topic.

Then I launch this script. Sorry, you can’t grab a coffee while the VHDX files are created, because you have to enter the administrator password manually for each image :)

Once the script is finished, you should have four VHDX as below.

Ok, now we have our four images. The next step is the Virtual Machine creation.

Virtual Machine configuration

Create Virtual Machines

To create virtual machines, connect to your Hyper-V Host running on Windows Server 2016 TP4. To create and configure Virtual Machines I have written this script:

$NanoServers = "HCNano01", "HCNano02", "HCNano03", "HCNano04"

Foreach ($HCNano in $NanoServers){

New-VM -Name $HCNano `

-Path D: `

-NoVHD `

-Generation 2 `

-MemoryStartupBytes 8GB `

-SwitchName LS_VMWorkload

Set-VM -Name $HCNano `

-ProcessorCount 4 `

-StaticMemory

Add-VMNetworkAdapter -VMName $HCNano -SwitchName LS_VMWorkload

Set-VMNetworkAdapter -VMName $HCNano -MacAddressSpoofing On -AllowTeaming On

}

This script creates four virtual machines called HCNano01, HCNano02, HCNano03 and HCNano04. These virtual machines are stored in D:\ and no VHDX is attached yet. They are Gen2 VMs with 4 vCPUs and 8GB of static memory. Then I add a second network adapter so that I can build a team inside the virtual machines (with Switch Embedded Teaming), which is why I enable MAC spoofing and teaming on the virtual network adapters. Below you can find the result.

Now, copy each Nano Server VHDX image into its related virtual machine folder. Below is an example:
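The copy can also be scripted. The following is only a sketch: it assumes the images are still in C:\temp\NanoServer\Images (the TargetPath used during image creation) and that they are reachable from the Hyper-V host, and it assumes each VM folder is D:\<VMName>, as created by New-VM with -Path D:. Adjust both paths if your layout differs.

```powershell
$NanoServers = "HCNano01", "HCNano02", "HCNano03", "HCNano04"

foreach ($HCNano in $NanoServers) {
    # Assumed source: the folder used as TargetPath when the images were generated
    $Source = "C:\temp\NanoServer\Images\$HCNano.vhdx"

    # Assumed destination: the VM folder created by New-VM -Path D:
    $Destination = "D:\$HCNano\$HCNano.vhdx"

    Copy-Item -Path $Source -Destination $Destination
}
```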

Then I run this script to add this VHDX to each virtual machine:

$NanoServers = "HCNano01", "HCNano02", "HCNano03", "HCNano04"

Foreach ($HCNano in $NanoServers){

# Add the virtual disk to VMs

Add-VMHardDiskDrive -VMName $HCNano `

-Path $("D:\" + $HCNano + "\" + $HCNano + ".vhdx")

$VirtualDrive = Get-VMHardDiskDrive -VMName $HCNano `

-ControllerNumber 0

# Change the boot order

Set-VMFirmware -VMName $HCNano -FirstBootDevice $VirtualDrive

}

This script adds the VHDX to each virtual machine and changes the boot order so that the VM boots from the hard drive first. Ok, our virtual machines are ready. Now we have to add the disks that Storage Spaces will use.

Create virtual disks for storage

Now I’m going to create 10 disks for each virtual machine. These disks are dynamic and each one is 10GB (oh come-on, it’s a lab :p).

$NanoServers = "HCNano01", "HCNano02", "HCNano03", "HCNano04"

Foreach ($HCNano in $NanoServers){

$NbrVHDX = 10

For ($i = 1 ;$i -le $NbrVHDX; $i++){

New-VHD -Path $("D:\" + $HCNano + "\" + $HCNano + "-Disk" + $i + ".vhdx") `

-SizeBytes 10GB `

-Dynamic

Add-VMHardDiskDrive -VMName $HCNano `

-Path $("D:\" + $HCNano + "\" + $HCNano + "-Disk" + $i + ".vhdx")

}

Start-VM -Name $HCNano

}

This script creates 10 virtual disks for each virtual machine and attaches them to the VM. Then each virtual machine is started.

At this point, we have four VMs with properly configured hardware. So now we have to configure the software part.

Hyper-V host side configuration

Enable trunk on Nano Server virtual network adapters

Each Nano Server will have four different virtual NICs with four different subnets and four different VLANs. These virtual NICs are connected to the Hyper-V host virtual NICs (yes, nested virtualization is really Inception). Because we need four different VLANs, we have to configure trunk mode on the virtual NICs on the Hyper-V host. By running the Get-VMNetworkAdapterVlan PowerShell command you should get something like this:

For each network adapter that you have created in the virtual machines, you can see the allowed VLANs and the mode (Trunk, Access or Untagged). It depends on the configuration that you made in the virtual machine. For our HyperConverged virtual machines, we need four allowed VLANs and therefore trunk mode. To set the virtual network adapters to trunk mode, I run the script below on my Hyper-V host:

Set-VMNetworkAdapterVlan -VMName HCNano01 -Trunk -NativeVlanId 0 -AllowedVlanIdList "10,100,101,102"

Set-VMNetworkAdapterVlan -VMName HCNano02 -Trunk -NativeVlanId 0 -AllowedVlanIdList "10,100,101,102"

Set-VMNetworkAdapterVlan -VMName HCNano03 -Trunk -NativeVlanId 0 -AllowedVlanIdList "10,100,101,102"

Set-VMNetworkAdapterVlan -VMName HCNano04 -Trunk -NativeVlanId 0 -AllowedVlanIdList "10,100,101,102"

So for each network adapter, I allow VLANs 10, 100, 101 and 102. The NativeVlanId is set to 0 so that all other traffic stays untagged. After running the above script, I run Get-VMNetworkAdapterVlan again and I have the configuration below.
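The same trunk configuration can be written as a loop over the VM names, in the style of the other scripts in this topic. This is a minimal sketch that assumes it runs on the Hyper-V host and that every network adapter of the four VMs should get the same trunk settings:

```powershell
$NanoServers = "HCNano01", "HCNano02", "HCNano03", "HCNano04"

foreach ($HCNano in $NanoServers) {
    # Applies to every network adapter of the VM, as in the script above
    Set-VMNetworkAdapterVlan -VMName $HCNano -Trunk -NativeVlanId 0 -AllowedVlanIdList "10,100,101,102"
}

# Display the resulting mode and VLAN list for all four VMs
Get-VMNetworkAdapterVlan -VMName $NanoServers
```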

Enable nested Hyper-V

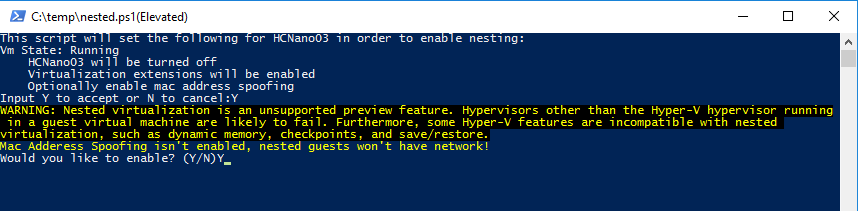

Now we have to enable nested Hyper-V to be able to run Hyper-V inside Hyper-V (oh my god, I have a headache. Where is DiCaprio?). Microsoft provides a script to enable nested Hyper-V. You can find it here. I have copied the script to a file called nested.ps1. Next, just run nested.ps1 -VMName <VMName> for each VM. You should get something like the output below, and the VM will then be stopped.

Then restart the four Nano Server VMs.
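On later Windows Server 2016 builds, nested virtualization can also be enabled without the helper script, through the ExposeVirtualizationExtensions parameter of Set-VMProcessor. I have not checked whether this parameter is available in TP4, so treat the sketch below as an alternative for newer builds rather than a replacement for nested.ps1. The VM must be turned off when the setting is changed.

```powershell
$NanoServers = "HCNano01", "HCNano02", "HCNano03", "HCNano04"

foreach ($HCNano in $NanoServers) {
    # The processor setting can only be changed while the VM is off
    Stop-VM -Name $HCNano

    # Expose the virtualization extensions of the host CPU to the VM
    Set-VMProcessor -VMName $HCNano -ExposeVirtualizationExtensions $true

    Start-VM -Name $HCNano
}
```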

Configure Nano Server system

To configure Nano Server, I will sometimes leverage PowerShell Direct. To use it, just run Enter-PSSession -VMName <VMName> -Credential <VMName>\Administrator. Once you are connected to the system, you can configure it. Because I’m a lazy guy, I have written one script to configure each server. The script below changes the time zone, creates a Switch Embedded Teaming, sets the IP addresses, enables RDMA and installs the features needed. This time, once you have launched the script, you can grab a coffee :).

$Credential = Get-Credential

$IPMgmt = 170

$IPSto = 10

$IPLM = 170

$IPClust = 170

$NanoServers = "HCNano01", "HCNano02", "HCNano03", "HCNano04"

Foreach ($HCNano in $NanoServers){

# Run the configuration inside the VM with PowerShell Direct. Enter-PSSession only works

# interactively, so the script uses Invoke-Command and passes the counters as arguments.

Invoke-Command -VMName $HCNano -Credential $Credential -ArgumentList $IPMgmt, $IPSto, $IPLM, $IPClust -ScriptBlock {

param($IPMgmt, $IPSto, $IPLM, $IPClust)

# Change the time zone to Romance Standard Time

tzutil /s "Romance Standard Time"

# Create a Switch Embedded Teaming with both network adapters

New-VMSwitch -Name Management -EnableEmbeddedTeaming $True -AllowManagementOS $True -NetAdapterName "Ethernet", "Ethernet 2"

# Add virtual NICs for Storage, Cluster and Live-Migration

Add-VMNetworkAdapter -ManagementOS -Name "Storage" -SwitchName Management

Add-VMNetworkAdapter -ManagementOS -Name "Cluster" -SwitchName Management

Add-VMNetworkAdapter -ManagementOS -Name "LiveMigration" -SwitchName Management

# Set the IP address for each virtual NIC and the DNS server on the Management vNIC

netsh interface ip set address "vEthernet (Management)" static 10.10.0.$IPMgmt 255.255.255.0 10.10.0.1

netsh interface ip set dns "vEthernet (Management)" static 10.10.0.20

netsh interface ip set address "vEthernet (Storage)" static 10.10.102.$IPSto 255.255.255.192

netsh interface ip set address "vEthernet (LiveMigration)" static 10.10.101.$IPLM 255.255.255.0

netsh interface ip set address "vEthernet (Cluster)" static 10.10.100.$IPClust 255.255.255.0

# Enable RDMA on Storage and Live-Migration

Enable-NetAdapterRDMA -Name "vEthernet (Storage)"

Enable-NetAdapterRDMA -Name "vEthernet (LiveMigration)"

}

# Install File Server and Storage Replica feature

install-WindowsFeature FS-FileServer, Storage-Replica -ComputerName $HCNano

# Restarting the VM

Restart-VM -Name $HCNano

$IPMgmt++

$IPSto++

$IPLM++

$IPClust++

}
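The script above does not tag the new virtual NICs with a VLAN, although the trunk on the Hyper-V host allows VLANs 10, 100, 101 and 102. If you want each vNIC in its own VLAN, something like the sketch below could be run from the Hyper-V host. The mapping between vNICs and VLAN IDs is my assumption, guessed from the third octet of each subnet. It is not stated in this topic, and the Management vNIC is deliberately left untouched.

```powershell
$Credential = Get-Credential
$NanoServers = "HCNano01", "HCNano02", "HCNano03", "HCNano04"

# Hypothetical vNIC-to-VLAN mapping, guessed from the subnets used above
$VlanMap = @{ Cluster = 100; LiveMigration = 101; Storage = 102 }

foreach ($HCNano in $NanoServers) {
    Invoke-Command -VMName $HCNano -Credential $Credential -ArgumentList $VlanMap -ScriptBlock {
        param($VlanMap)

        foreach ($Name in $VlanMap.Keys) {
            # Put the management OS vNIC in access mode on its VLAN
            Set-VMNetworkAdapterVlan -ManagementOS -VMNetworkAdapterName $Name -Access -VlanId $VlanMap[$Name]
        }
    }
}
```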

At the end, I have four virtual NICs, as you can see with the Get-NetAdapter command:

Then I have a Switch Embedded Teaming called Management composed of two Network Adapters.

Finally, RDMA is properly enabled on the Storage and Live-Migration networks.
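These three checks can be run in one go from the Hyper-V host through PowerShell Direct. A minimal sketch, assuming HCNano01 is the node to inspect and that the switch is named Management as in the script above:

```powershell
$Credential = Get-Credential

Invoke-Command -VMName HCNano01 -Credential $Credential -ScriptBlock {
    # The four virtual NICs plus the two team members
    Get-NetAdapter | Sort-Object Name

    # Members of the Switch Embedded Teaming
    Get-VMSwitchTeam -Name Management

    # RDMA state of each adapter
    Get-NetAdapterRdma
}
```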

So the network is ready on each node. Now we just have to create the cluster :)

Create and configure the cluster

First of all, I run Test-Cluster to verify that my nodes are ready to be part of a Storage Spaces Direct cluster. So I run the command below:

Test-Cluster -Node "HCNano01", "HCNano02", "HCNano03", "HCNano04" -Include "Storage Spaces Direct", Inventory,Network,"System Configuration"

I get a warning in the network configuration because I use private networks for storage and cluster, so no ping is possible across these two networks. I therefore ignore this warning. Let’s start the cluster creation:

New-Cluster -Name HCCluster -Node HCNano01, HCNano02, HCNano03, HCNano04 -NoStorage -StaticAddress 10.10.0.174

Then the cluster is formed:

Now we can configure the networks. I start by renaming them and setting the Storage network’s role to Cluster and Client.

(Get-ClusterNetwork -Cluster HCCluster -Name "Cluster Network 1").Name="Management"

(Get-ClusterNetwork -Cluster HCCluster -Name "Cluster Network 2").Name="Storage"

(Get-ClusterNetwork -Cluster HCCluster -Name "Cluster Network 3").Name="Cluster"

(Get-ClusterNetwork -Cluster HCCluster -Name "Cluster Network 4").Name="Live-Migration"

(Get-ClusterNetwork -Cluster HCCluster -Name "Storage").Role="ClusterAndClient"

Then I change the Live-Migration settings so that the cluster uses the Live-Migration network for … Live-Migration. I don’t use PowerShell for this step because it is easier to do it in the GUI.
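If you prefer to script this step anyway, one common approach is to exclude every cluster network except the Live-Migration one through the MigrationExcludeNetworks parameter of the "Virtual Machine" resource type. This is a sketch, not what I did in the lab. It assumes the networks have already been renamed as above.

```powershell
# IDs of every cluster network that must NOT carry Live-Migration traffic
$ExcludedIds = (Get-ClusterNetwork -Cluster HCCluster |
    Where-Object { $_.Name -ne "Live-Migration" }).Id

# The parameter expects the network IDs as one semicolon-separated string
Get-ClusterResourceType -Cluster HCCluster -Name "Virtual Machine" |
    Set-ClusterParameter -Name MigrationExcludeNetworks -Value ($ExcludedIds -join ";")
```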

Then I configure a witness by using the new feature in Windows Server 2016: the Cloud Witness.

Set-ClusterQuorum -Cluster HCCluster -CloudWitness -AccountName homecloud -AccessKey <AccessKey>

Then I enable Storage Spaces Direct on my cluster. Please read this topic to find the right command to enable Storage Spaces Direct, because it can change depending on the storage devices you use (NVMe + SSD, SSD + HDD and so on).
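For orientation only, the basic form of the command looks like the sketch below. The cmdlet name and its options changed between the preview builds (an Enable-ClusterS2D short name also exists), and the cache-related options depend on your disks, so check the referenced topic for the exact syntax of your build. Running it on HCNano01 is an arbitrary choice, and the cmdlet may ask for confirmation on some builds.

```powershell
# Run on one cluster node. The node name is an arbitrary choice.
Invoke-Command -ComputerName HCNano01 -ScriptBlock {
    # Basic form only: cache options for your disk types are not shown here
    Enable-ClusterStorageSpacesDirect
}
```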

Ok, the cluster is now ready. We can create some Storage Spaces for our Virtual Machines.

Create Storage Spaces

Because I run commands remotely, I first have to connect to the storage provider with the Register-StorageSubSystem PowerShell cmdlet.

Register-StorageSubSystem -ComputerName HCCluster.int.homecloud.net -ProviderName *

Now when I run Get-StorageSubSystem as below, I can see the storage provider on the cluster.

Now I verify that the disks are properly recognized and can be added to a Storage Pool. As you can see below, I have my 40 disks.
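A check along these lines lists the disks that are eligible for pooling and counts them. It is a sketch that assumes the storage subsystem has been registered as shown above:

```powershell
# Physical disks of the cluster subsystem that can still be added to a pool
$Disks = Get-StorageSubSystem -Name HCCluster.int.homecloud.net |
    Get-PhysicalDisk |
    Where-Object { $_.CanPool -eq $true }

$Disks | Sort-Object FriendlyName

# Expected in this lab: 4 nodes x 10 disks = 40
@($Disks).Count
```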

Next I create a Storage Pool with all disks available.

New-StoragePool -StorageSubSystemName HCCluster.int.homecloud.net `

-FriendlyName VMPool `

-WriteCacheSizeDefault 0 `

-ProvisioningTypeDefault Fixed `

-ResiliencySettingNameDefault Mirror `

-PhysicalDisk (Get-StorageSubSystem -Name HCCluster.int.homecloud.net | Get-PhysicalDisk)

If I come back in the Failover Cluster GUI, I have a new Storage Pool called VMPool.

Then I create two mirrored volumes. One has 4 columns and the other 2 columns. With PhysicalDiskRedundancy set to 2, each volume keeps three copies of the data. Each volume is formatted with ReFS and is 50GB in size.

New-Volume -StoragePoolFriendlyName VMPool `

-FriendlyName VMStorage01 `

-NumberOfColumns 4 `

-PhysicalDiskRedundancy 2 `

-FileSystem CSVFS_REFS `

-Size 50GB

New-Volume -StoragePoolFriendlyName VMPool `

-FriendlyName VMStorage02 `

-NumberOfColumns 2 `

-PhysicalDiskRedundancy 2 `

-FileSystem CSVFS_REFS `

-Size 50GB
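To see roughly how much of the pool these two volumes consume, here is a back-of-the-envelope calculation. It is an approximation only: it ignores pool metadata and other overhead, and it relies on the fact that a mirror with PhysicalDiskRedundancy 2 keeps three copies of the data.

```powershell
$DiskCount    = 4 * 10          # four nodes, ten data disks each
$DiskSizeGB   = 10
$RawGB        = $DiskCount * $DiskSizeGB            # raw pool capacity

$Copies       = 2 + 1           # PhysicalDiskRedundancy 2 means three data copies
$VolumeSizeGB = 50
$VolumeCount  = 2
$FootprintGB  = $VolumeCount * $VolumeSizeGB * $Copies

$RemainingGB  = $RawGB - $FootprintGB

"Raw: $RawGB GB, used by volumes: $FootprintGB GB, remaining: $RemainingGB GB"
# Raw: 400 GB, used by volumes: 300 GB, remaining: 100 GB
```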

Now I have two cluster virtual disks, and they are mounted in C:\ClusterStorage\VolumeX.
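The Cluster Shared Volumes and their mount points can also be listed with PowerShell. A minimal sketch, run from a machine with the Failover Clustering tools:

```powershell
# State and owner node of each Cluster Shared Volume
Get-ClusterSharedVolume -Cluster HCCluster | Select-Object Name, State, OwnerNode

# Mount point of each volume under C:\ClusterStorage
Get-ClusterSharedVolume -Cluster HCCluster |
    Select-Object -ExpandProperty SharedVolumeInfo |
    Select-Object FriendlyVolumeName
```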

Host VMs

So I create a virtual machine and I use C:\ClusterStorage to host the VM files.

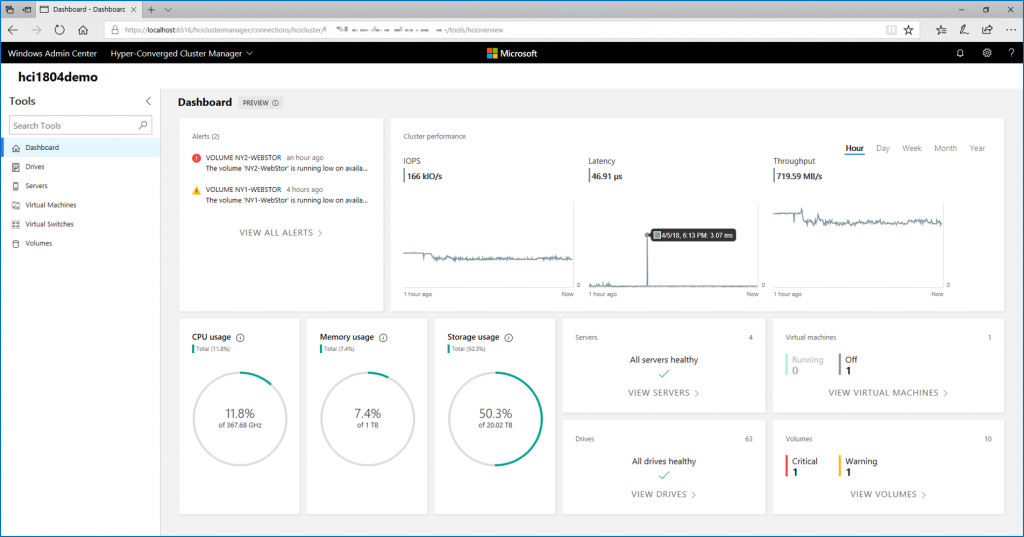

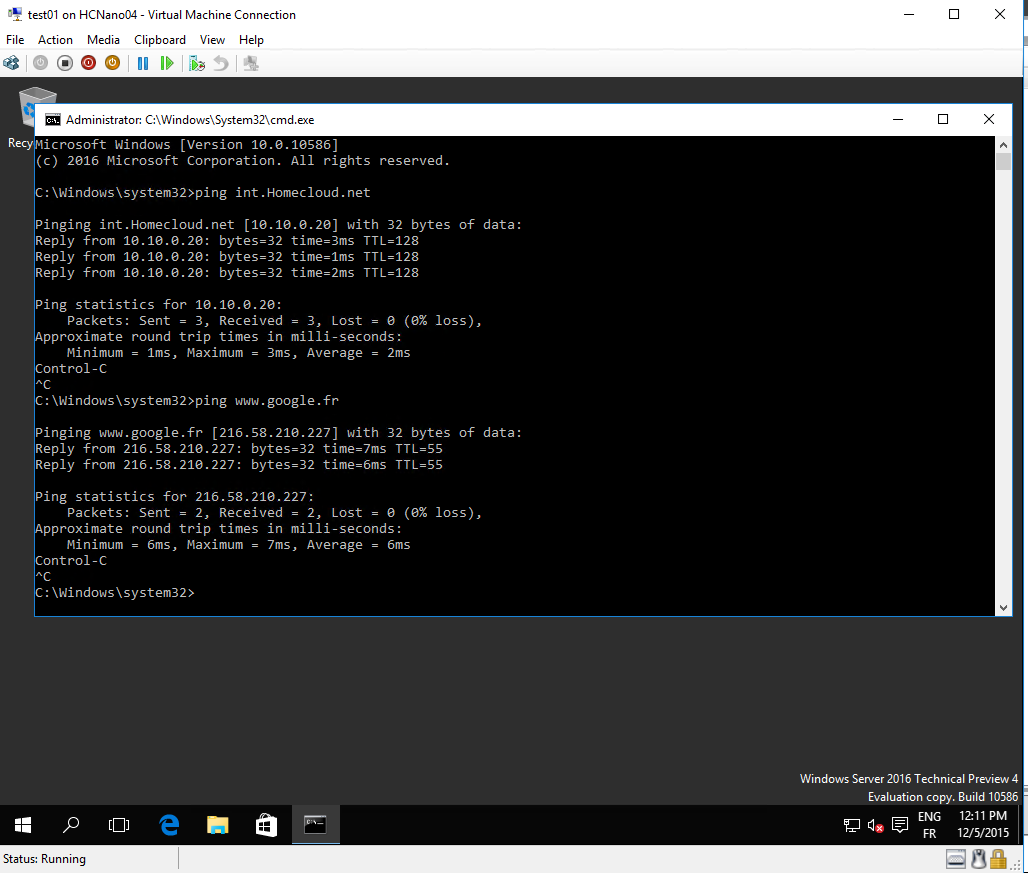
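The same thing can be done with PowerShell instead of the GUI. In the sketch below, the VM name TestVM01, its size and the Volume1 folder are hypothetical. Only the Management switch and the cluster name come from the steps above.

```powershell
# Create the VM on one node, with its files on a Cluster Shared Volume
Invoke-Command -ComputerName HCNano01 -ScriptBlock {
    New-VM -Name TestVM01 `
           -Path C:\ClusterStorage\Volume1 `
           -Generation 2 `
           -MemoryStartupBytes 1GB `
           -NewVHDPath C:\ClusterStorage\Volume1\TestVM01\TestVM01.vhdx `
           -NewVHDSizeBytes 20GB `
           -SwitchName Management
}

# Make the VM highly available in the cluster
Add-ClusterVirtualMachineRole -Cluster HCCluster -VMName TestVM01
```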

Then I try to start the virtual machine. If it runs, everything is working, including nested Hyper-V :).

I try a ping to my Active Directory and to Google. It’s working, oh yeah.

Conclusion

HyperConverged is a great, flexible solution. As you have seen above, it is not so hard to install. However, I think that sizing it takes real work, and strong knowledge of Storage Spaces, networking and Hyper-V is necessary. In my opinion, Nano Servers are a great fit for this kind of solution because of their small footprint on disk and on compute resources. Moreover, they need fewer Microsoft updates and therefore fewer reboots. Finally, Nano Servers reboot quickly, so we can follow the trend “Fail hard but fail fast”. I hope that Microsoft will not kill its HyperConverged solution with the Windows Server 2016 license model …